Export Fixes & UI Improvements

Fixes & stability

- Resolved export failures caused by unexpected video encodings on avatar and Getty asset files.

- Fixed an issue where the usage limit notification would occasionally fail to appear when credits were exhausted during generation.

- Refined the upgrade modal layout for improved display on smaller screens.

Image-to-Video Generation & Performance Improvements

Image-to-Video

We’ve added an "Image to video" option to the "Generate AI clip" workflow: start with an image and a prompt, and VideoGen picks the best model to animate it. You can choose to include audio in the generated video clip as well.

If you have an existing image that you would like to animate, you can select the image and use the "Convert to video" action in the right side panel.

Fixes & stability

- Resolved an issue where dragging an asset upward would incorrectly add extra layers to all sections.

- Fixed a bug that occasionally caused text-to-speech generations to be truncated prematurely.

- Improved mobile playback performance with better memory efficiency and support for multiple concurrent audio and video tracks.

Subscription & Voice Improvements

Better subscription upgrade flow

- Existing subscribers upgrading their plan are now redirected to a Stripe-hosted confirmation page for a smoother checkout experience.

Fixes & stability

- Resolved an issue where upgrades on subscriptions with an invalid payment method would fail silently.

- Fixed a bug that caused in-world text-to-speech to fail when scripts contained multiple consecutive line breaks.

- Corrected character timing alignment for text-to-speech generations using pronunciation replacements.

- Fixed an issue preventing Stripe customer data from being properly linked to some user accounts.

VideoGen 3.2.0: Faster Editing, New Annotation Tools, and More Reliable Voiceovers

New features

Workflows

Each new video now starts with a workflow. We are launching with 7 core workflows, with plans to add more in the future.

- Idea to video (recommended for beginners): Create a full video from an idea, notes, or uploaded files.

- Script to video: Turn a finished script into a narrated video with visuals.

- Voiceover to video: Upload a voiceover. AI will add b-roll and captions.

- Record: Record yourself, your screen, or both. Then, edit with built-in AI tools like auto-captioning and translation.

- Upload and edit: Upload media and directly edit, caption, or translate.

- Generate AI clip: Generate a 5-10s AI video clip from a prompt. Then, edit freely.

- Video editor: Edit from scratch with built-in media libraries and powerful AI tools.

Additionally, users can request a new workflow!

Text and animation improvements

- Inline text editing is now supported, making it faster to refine on-screen copy without breaking your workflow. Apply bold or italic formatting, add auto-formatted bullet lists, or add animations.

- Animations now have a duration slider and are more performant.

- Text animations have 4 modes: All, line-by-line, word-by-word, and character-by-character.

Performance improvements

- Rebuilt the editor from the ground up to dramatically reduce lag and improve overall responsiveness.

- Optimized long timelines so creating and editing longer videos stays smooth from start to finish.

- Added full support for mobile playback in video previews.

New tools

- Added line and arrow annotation tools for quick callouts, highlights, and on-screen guidance.

- Added an option to duplicate sections.

- Added box select option for multi-selecting layers in the timeline.

- Redesigned core flows to reduce clutter, surface the most important actions, and make navigation more intuitive.

Other improvements and fixes

- Changed primary button color from red to blue.

- Improved layouts in the script / wireframe storyboard view with automatic animations, better pacing, and more.

- Fixed an issue that could prevent avatars from being regenerated in certain cases.

- Added media style presets (Free stock, AI image, and Premium iStock) to the Idea to video and Script to video workflows.

- Added a more accessible multi-step product tour.

- Improved the post-upgrade onboarding flow to help you get back to creating faster.

- Improved voiceover reliability and resolved multiple edge-case failures.

- Optimized script writer to align better with user prompts.

- Improved project sharing reliability.

- Improved "Import from URL" so imported files are automatically added to the project.

- Additional bug fixes and UX polish across the editor.

New Voice Features

More Text-to-Speech voices

- We’ve added new voices to our TTS voice library.

Adjustable voice speed

- You can now adjust text-to-speech speed to better match your video’s pacing.

Fixes & stability

- Resolved an issue that could cause video generations in Korean to fail.

- General bug fixes and performance improvements.

VideoGen 3.1.0: New features and improvements

New features

Recording studio

- Record your webcam and screen directly inside the editor.

- Instantly upload recordings to your project timeline for seamless editing.

- Supports microphone input and multi-source capture for creators and teams.

New ways to edit sections

- Section Transitions: Smooth, customizable transitions between scenes.

- Section Backgrounds: Add solid colors, gradients, or media backgrounds to sections.

- Slides Tab: Quickly preview and manage all your sections like a deck of slides.

- Bulk Animations: Apply animations or effects to multiple assets in one action.

New asset actions

- Multi-select Property Control: Adjust position, timing, and animation for several assets simultaneously.

- Send to Front/Back: Reorder assets visually in your timeline with new context menu actions.

Content sources & redesigned outline

- Streamlined outline view for faster navigation and editing.

- Improved organization for text, media, and sections for a cleaner workflow.

Other improvements and fixes

- Fixed text-to-speech timing and pronunciation issues.

- Fixed flickering and flashing issues during export rendering.

- Improved voice-over regeneration and avatar playback reliability.

- Fixed delayed media loading and improved overall export stability.

- Smoothed out mobile preview playback and improved device handling.

- AI agent now consistently follows preset custom instructions and writing style.

Performance improvements

We've made significant performance improvements across the platform to deliver a faster and smoother editing experience:

- Timeline performance: Optimized timeline rendering and interactions for noticeably smoother playback and editing, even with complex multi-layer projects.

- Faster project loading: Reduced project load times, getting you into the editor faster.

Mobile preview improvements

The mobile preview now loads faster and runs more smoothly across all devices:

- Thumbnail assets for faster loading: The mobile preview now uses optimized thumbnail assets instead of full-resolution media, resulting in faster and more efficient loading times.

- Fixed editor crashes: Resolved issues that were causing the editor to crash on mobile devices.

- Improved audio playback: Enhanced audio playback reliability and synchronization in the mobile preview.

Improved export reliability

We've enhanced the export pipeline to make video exports more reliable and consistent. Exports now succeed more often, with better error handling and recovery for edge cases.

Fixes regarding AI avatars

We've fixed a bug in our AI avatar generation that was blocking some users from seeing their avatar in the editor. We also made it easier to decide whether or not an avatar should be generated for a video by adding a "Narration mode" option in the "Overview" page.

Other improvements and fixes

- Added more fonts to the text editor, giving you greater flexibility in styling your video content.

- Renamed "Auto select" to "Auto replace" for clearer indication of the feature's behavior.

- Added context menus to frames, providing quick access to common frame actions.

- Added canvas display to frames for better visual preview of frame content.

- Added "Add voiceover" button to each section in the script that doesn't have a voiceover, making it easier to add narration where needed.

- Changed the design of sections in the timeline, moving the header to the top.

- Fixed timeline bugs, including asset and section trimming issues.

- Fixed issues with timeline zooming that were affecting precise editing.

VideoGen 3.0: Agentic Video Editor

VideoGen 3.0 transforms our platform into a full-featured video editor powered by AI. This release introduces a redesigned three-stage creation flow (Overview, Outline, Editor), a brand-new interactive canvas, and an enhanced timeline editor. We've rebuilt our rendering pipeline for perfect preview-to-export accuracy, added a background task queue for reliable long-running operations, and expanded our stock library with over 12 million new assets. Together, these updates create a more visual, intuitive, and powerful editing experience.

New video creation flow: Overview → Outline → Editor

We introduced a redesigned video creation flow built around three stages — Overview, Outline, and Editor — to make project setup and AI collaboration more structured and predictable.

Overview page

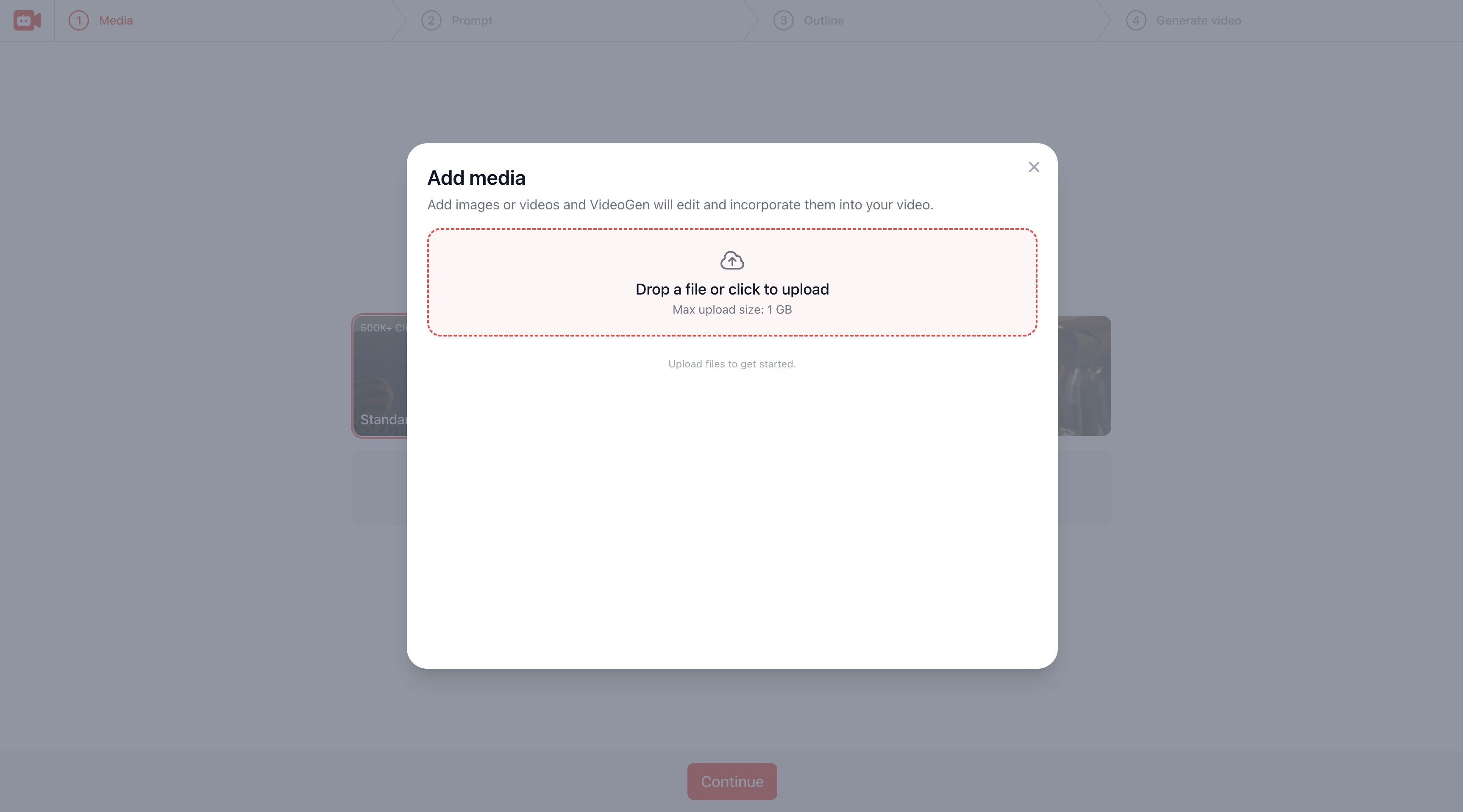

In the Overview page, you can upload images, videos, and audio files that you want the AI agent to use when generating your video. These assets serve as context for the AI — they can appear directly as visuals, help guide topic understanding, or be referenced when building the script and outline.

You can also specify your media sources, such as Free Stock, Wikimedia, iStock, AI Images, or Music. The AI agent will draw from these sources during generation, combining your uploaded assets with external visuals and audio to produce the most relevant media for each scene.

You also have more fine-grained controls, allowing you to define your aspect ratio, duration range, and language.

Outline page

After submitting your brief, the AI agent creates a structured outline that breaks your video into sections.

Each section is assigned a section type based on its audio handling:

- AI Voiceover: Generates an AI voiceover that narrates the section text.

- Transcribed Audio: Plays the original uploaded audio or video file, transcribed for editing.

- No Voiceover: Plays media as-is, without narration — often used for standalone clips or cinematic sequences.

You can review and edit these sections before moving into the editor.

Featured media

Within each section, you can set featured media, which takes priority over AI-selected b-roll. Featured media ensures specific visuals (like brand clips, demo videos, or uploaded footage) always appear in the final render for that section.

This new three-stage workflow creates a clearer separation between planning, structure, and editing, while giving the AI stronger context for generating accurate visuals and narration.

New layout system

We introduced a new layout system that gives users more control over how text and visuals are arranged within each section.

Layouts determine the visual structure of a scene — how the title, subtitle, and media appear on screen — making it easier to match the presentation style to the content type.

The following layouts are now available in the editor:

- Auto: Lets the AI automatically select the most suitable layout based on your content and media.

- Full-Screen Media: Displays the visual asset at full frame.

- Simple Title: A clean layout with a title and subtitle on a neutral background.

- Hero Title: Places text over background media for impactful opening or transition moments.

- Split (Text Left / Text Right): Divides the screen between visuals and text, ideal for explainers and side-by-side comparisons.

- Lower Thirds: Overlays text at the bottom of the frame.

- Simple Text: Focuses on text-based content with a neutral background.

Interactive canvas with transform and drag controls

We've added a brand-new interactive canvas that allows direct manipulation of elements in your video:

- Drag: Click and drag to reposition elements precisely where you want them.

- Resize / Transform: Adjust size and scale interactively using handles.

- Snapping: Elements snap to guides and other objects for clean, aligned layouts.

- Animations: Add enter and exit animations to any element directly from the canvas.

These controls are powered by our unified rendering engine, meaning you can see exact, real-time changes to your final composition as you work.

This brings a much more visual and intuitive editing experience — you can now fine-tune positioning, scaling, and animations directly on the canvas without manually entering numbers.

Enhanced timeline editor

We've redesigned the timeline editor to give you more precise control over your video's timing and structure:

- Layer Management: Work with multiple layers of media, text assets, and shapes, all organized in a clear timeline view.

- Split: Divide clips at any point to create separate segments you can edit independently.

- Trim: Adjust the start and end points of any clip to control exactly what appears in your video.

- Reorder: Drag clips to rearrange them and change the sequence of your video.

The timeline syncs in real-time with the canvas preview, so every change you make is immediately reflected in your composition. You can scrub through the timeline to preview specific moments, making it easy to fine-tune transitions and timing across your entire video.

Re-implemented preview and export pipeline

We've overhauled our video rendering pipeline so that both preview and final export now run through the same underlying renderer. Previously, previews and exports used slightly different rendering code paths, which could occasionally lead to inconsistencies between what you saw while editing and the final output.

By consolidating them into a unified pipeline:

- What you see is what you get: the export will now match your preview exactly.

- Rendering bugs are easier to track and fix because there’s only one rendering path to maintain.

- We can introduce advanced editing features more quickly, since any improvements to the renderer apply to both preview and export automatically.

This foundation makes video editing more reliable today and faster to evolve in the future.

Background task queue

We implemented a new background task queue to make long tasks run more reliably, even if you close the tab before the process completes. The following actions will always be executed as background tasks:

- Generate outline

- Generate video

- Generate image

- Generate video clip

- Generate text-to-speech

- Generate sound effect

- Scan website

Built from the ground up with minimal latency, automatic retries, and multiple fallbacks, this new system makes the video generation experience as seamless as possible for our users.

Expanded stock library with >12M new assets

We’ve expanded the built-in stock media library with over 12 million new assets, including integrations with Pexels Images and Wikimedia Commons. This update provides broader visual coverage across topics, giving the AI agent access to both high-quality stock footage and educational media such as diagrams and public figures.

Other improvements and fixes

- Our AI agent will now automatically select an AI voice and avatar (if needed) based on the contents of your script and locale.

- We introduced a new "Deep Research" mode that allows the AI agent to perform multi-step reasoning to build outlines with greater depth.

- Added "Generate sound effect" media tool, which converts any prompt into a short sound effect audio asset.

- Content filter setting now applies to the stock library search filter, preventing unwanted inclusion of inappropriate assets.

- Added an option on the "Team" page that lets members leave their team. Previously, members could only be removed by a team admin.

- Fixed issue causing website scans to fail on any website without a meta description tag.

- Improved the reliability of the web scraping system when downloading images from the requested site.

- Enforced strict ordering of subscription updates in our backend to prevent occasional subscription data syncing issues.

- Website scans now always include the website's Open Graph image as the first entry in the list of scraped images.

- Users can now click a button in "Billing settings" to manually resync their subscription data.

- Increased rate limit on video exports for paid subscribers.

- Resolved an issue that prevented storage limit errors from being properly displayed to the user.

Better handling of inactive subscriptions

We overhauled our UX for dealing with failed subscription payments across the entire app. Now, when you attempt to use any paid feature while your subscription is inactive, a modal appears with clear instructions on how to reactivate your subscription. From here, you can view the incomplete invoice, manage your subscription, or contact our customer support team (with the relevant details of your account automatically included in the conversation). There is also a clear warning that your subscription is inactive on the main dashboard with a button to open this modal.

Other improvements and fixes

- Resolved compatibility issue causing some old projects to fail to generate.

- Added "Upload" and "Change" buttons to the right side panel for asset groups.

- As a temporary fix to prevent accidental regenerations of title screens into stock footage, the "R" keyboard shortcut is disabled on the top layer.

- Polished UI and included examples of high-quality results for generative AI tools.

Project sharing

You can now share a copy of your project with your teammates. Click "Share" in the top-right corner of the project editor, click "Share a copy", and then enter a comma-separated list of the emails you'd like to share the project with. Each recipient will receive a full copy of your project in their inbox, allowing them to edit, generate, and export the video from their own account. Recipients who are not already part of your team will be added to your team upon acceptance of the invitation.

"Generate video clip" tool

We introduced a new "Generate video clip" tool that fully synthesizes a 5-10 second video based on a prompt. It may take a few minutes to generate, and results are best for well-structured prompts with specific subjects, actions, and settings. We are currently offering this tool exclusively to Business subscribers.

Other improvements and fixes

- Changed teams billing behavior to immediately charge prorated amount after adding a new member.

- Expanded our voice library with a larger variety of regional accents and dialects.

- Added "Create public view link" popover, allowing users to export and make the view link public in a single click.

- If a view link is made public while the export is pending, the Open Graph preview image is now updated to match the exported video upon completion of the export.

- Finalized migration of personal workspaces to teams, resolving several miscellaneous compatibility issues.

- Improved loading speed on the landing page with progressive loading of assets.

- Enabled content filter by default for all new users to prevent inappropriate image generations.

- Removed "AI" watermark on avatar video generations.

- Included an avatar button next to the voice button in the script editor, increasing visibility of our avatar generation feature.

- Clicking outside of a modal no longer causes popovers underneath the modal to close as well.

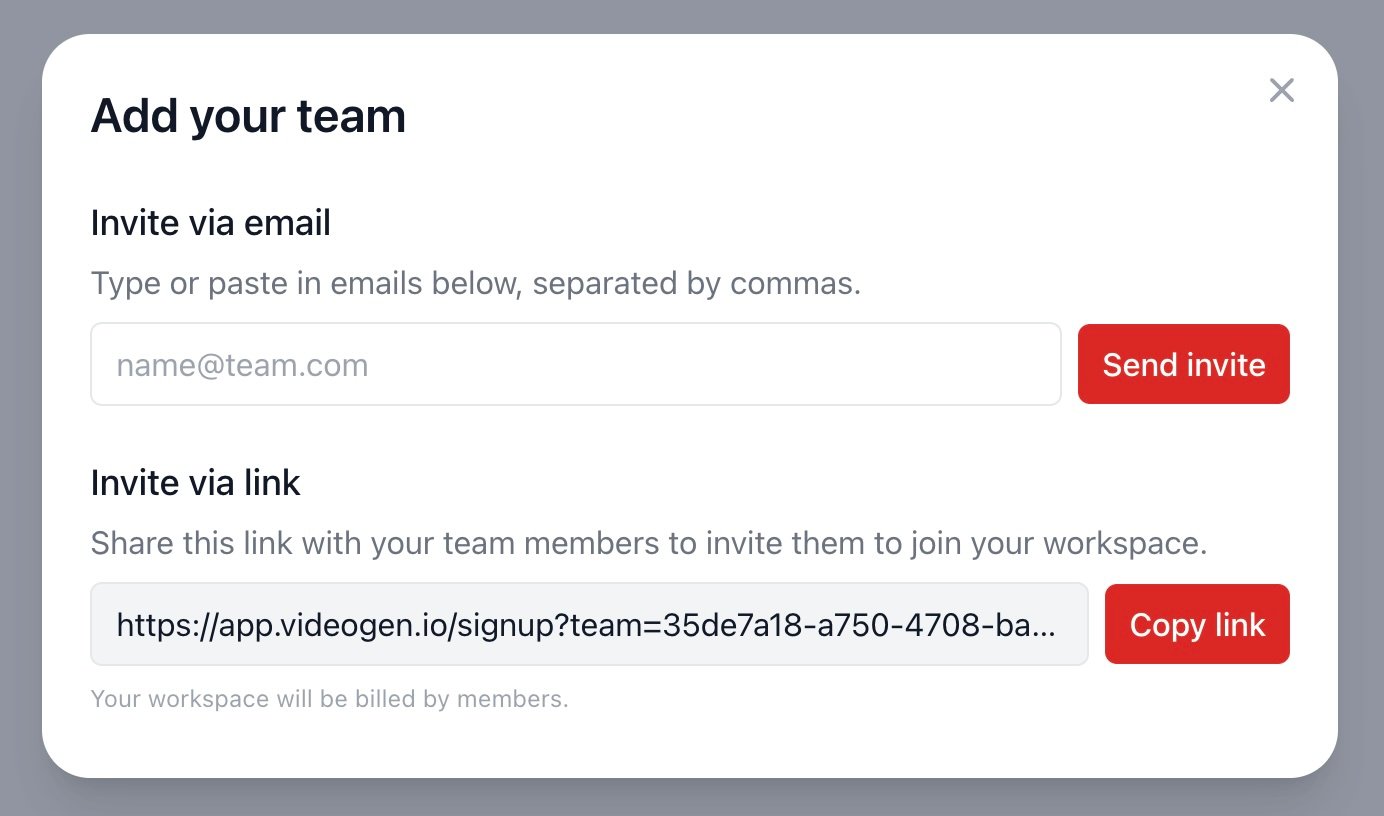

Personal workspaces are now teams

We converted all personal workspaces to single-member teams, making it easier than ever to create videos alongside your teammates. To invite your teammates, click "Invite teammates" in the top-right corner of the dashboard and enter their emails. To see a list of all your team members and modify their permissions, visit the Teams page.

Other improvements and fixes

- Enhanced the music library with significantly more tracks across various genres.

- Added more checks to ensure that teammate additions and removals are always reflected instantly in the subscription quantity.

- Resolved issue causing infinite buffering on the video export view page.

- Fixed several small subscription processing bugs that were causing delays in subscription metadata updates.

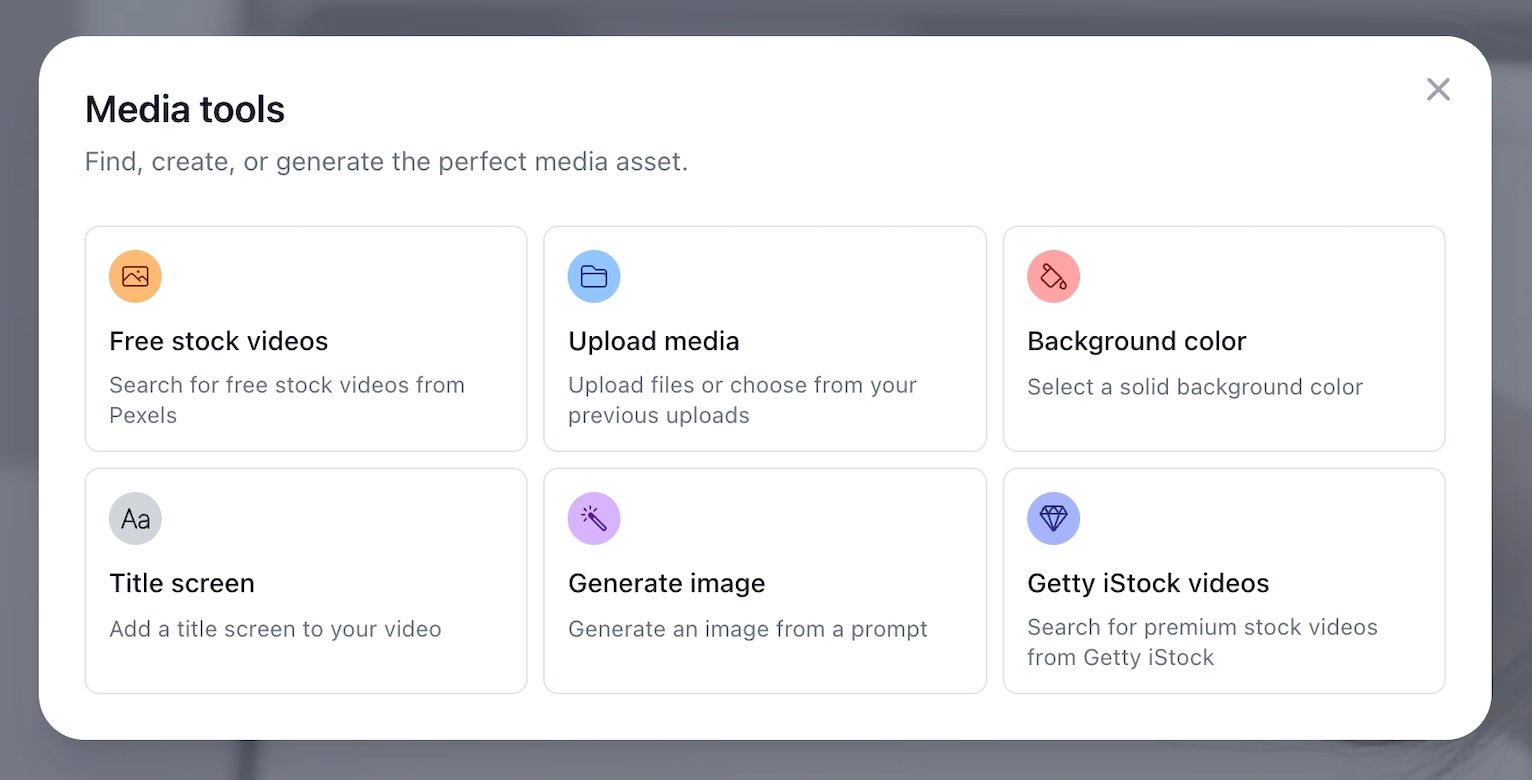

Media tools

Media tools are a set of flows for creating and generating assets in the project editor. You can access these tools in the right side panel by clicking on an asset in the timeline. For a blank asset, the list of available tools appears directly in the side panel. For a populated non-transcript asset, click "Replace" to replace the asset with the output of a media tool.

The following tools are currently available:

- Free stock videos

- Getty iStock videos

- Upload media

- Background color

- Title screen

- Generate image

Many more generative AI tools are coming soon!

Automatic music selection

All videos are now generated with a background music track to complement the content of your video. To power this system, we built an AI music agent that intelligently analyzes your video outline and automatically selects the perfect track from our music library. We also enhanced our music library with many more tracks to cover a wide range of different genres, moods, and tempos.

Other improvements and fixes

- Implemented more optimizations to video preview in the project editor, further reducing lag for long videos.

- Improved UX on title screen creation in the timeline, preventing accidental additions of overlays to projects.

- Patched issue causing English text to briefly flash before loading translations for non-English users.

- Added usage limit modal to clearly indicate how long you have to wait for your AI usage limit to reset.

- Fixed several small styling and layout shift issues on mobile.

Optimized timeline and preview

We reimplemented our timeline and preview to only load what's necessary for the visible portion of your video, allowing for optimized playback of long videos in the project editor. Previously, videos over 10 minutes long could be somewhat laggy.

Smarter AI agent for media editing

When you include your own media assets in the video generation form, VideoGen places each of these assets where they are most relevant to the voice-over script. We overhauled our system for this with a new AI agent that understands the content of each asset and intelligently edits together the entire b-roll track. The agent will also choose different animation styles depending on its categorization of the asset (e.g., screenshot, icon, infographic).

Other improvements and fixes

- Fixed issue causing a few users with multiple expired subscriptions to not see their most recent subscription.

- Changed default caption style to highlight the current spoken word, making the captions more engaging.

- Updated trimmer logic to properly render all asset trimmers within the bounds of the layer.

- Removed lag when trimming the start and end times of a background asset.

- Resolved bug causing some video exports with Getty iStock assets to fail.

- Increased chromatic variety of sequential generative images in generated video.

Avatars


You can now generate an AI avatar on top of your video to present your voice-over script with matching lip movements. Choose from our library of over 100 lifelike presenters to make your videos more engaging and personal. Avatars are currently only available to Business and Enterprise subscribers.

To add an AI avatar to an existing AI voice section, click on the speaker name, click on the avatar button at the top of the popover, select your favorite avatar presenter, and then click generate. Your avatar will be ready to preview and export within a few minutes!

Multi-layer timeline

We extended the timeline to have multiple layers to allow for more flexibility and customization in your videos. The bottom layer shows the background assets, which you can trim, split, replace, and rearrange. The middle layer shows the script asset, which corresponds to your AI voice and/or avatar. Finally, the top layer shows your title screen overlay, which you can customize in the "Theme" tab on the left side panel. In the timeline, you can also click on an asset to select it and view more advanced editing capabilities in the right side panel.

Other improvements and fixes

- Implemented various fixes to teams, allowing for seamless transfer between personal and teams subscriptions.

- Resolved bug causing newly generated sections in the project editor to occasionally repeat background assets already present in the video.

- Revamped text overlays to export significantly faster, especially for long videos.